Most biomechanics research takes place in laboratory settings, in part because conventional approaches, such as optical motion capture, require reflective markers and substantial calibration to effectively measure human movement. This makes them impractical for work in the field.

Noel Perkins, Donald T. Greenwood Collegiate Professor and Arthur F. Thurnau Professor in Mechanical Engineering, is helping researchers break free of that constraint.

In a three-year research project sponsored by the U.S. Army, Perkins and his team are developing an automated measurement system to quantify the physical performance of individuals as they move through an outdoor obstacle course.

The Army uses performance on such courses to understand whether soldiers are adequately trained for the situations and environments they may face and how their performance is impacted by the gear they carry.

“If you only look at gait speed and how long it takes to complete a particular obstacle to assess performance, you miss a lot of the biomechanical movements that are responsible for it,” Perkins said.

Instead, researchers in his laboratory, with colleagues in Professor Leia Stirling’s laboratory at the Massachusetts Institute of Technology, are using measurement techniques based on wireless inertial measurement units, or IMUs.

The small, low-cost, lightweight sensors contain accelerometers, gyroscopes and magnetometers and eliminate many experimental constraints, since they don’t require external reference points for dead reckoning.

“If you put these on the body, you can now measure the motion of all major body segments simultaneously and outside a lab,” Perkins said.

For example, Research Investigator Dr. Stephen Cain is working to quantify soldier performance on balance beam, window and wall obstacles. In the case of the balance beam, performance assessment traditionally has consisted of two metrics: time to move across the beam and whether the individual did so without falling.

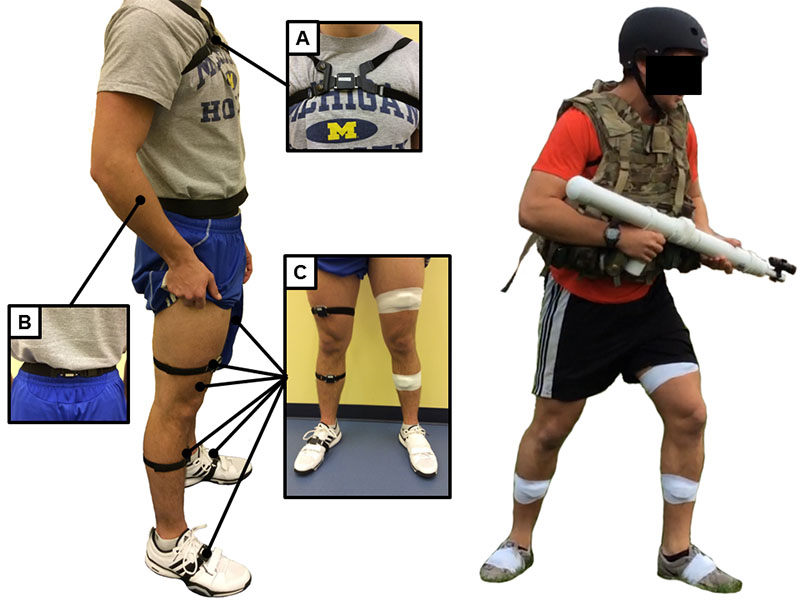

Cain is using more than a dozen sensors attached to the body, helmet and a mock rifle. Early analysis employed data from the feet, pelvis and torso – a choice based on both data reliability and video analysis, which revealed that study participants primarily used step placement and left and right body lean to correct their balance.

From the torso sensor data, he determined how far subjects leaned, how often and for how long they leaned, and whether leaning occurred while moving quickly or slowly. While many performance metrics can be derived from the measured data, the most predictive metric of balance performance was the variability of the size of balance corrections that a participant used when crossing the beam.

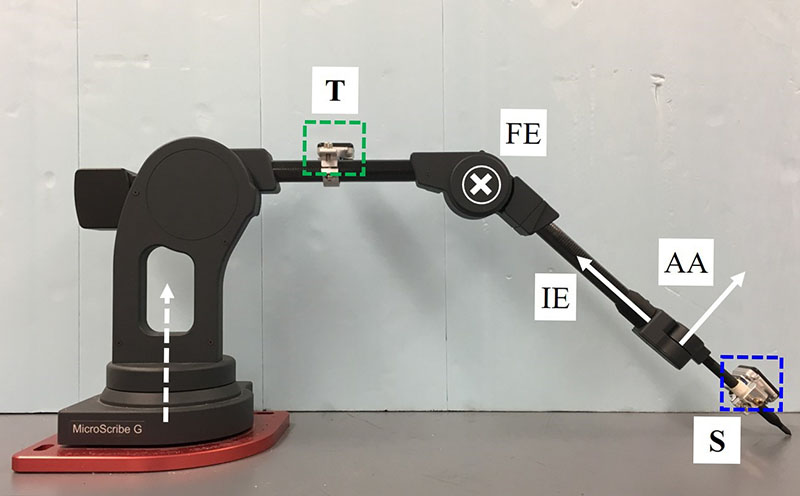

Doctoral candidate Rachel Vitali is focusing on using IMU data to estimate three-dimensional rotations across the human knee joint. She does so by utilizing data collected simultaneously from two strategically placed IMUs: one attached to the thigh and one attached to the shank.

Measurements from these IMUs are ultimately fused to reconstruct the orientation of the shank relative to the thigh, which in turn provides the three rotation angles across the knee, namely flexion/extension, abduction/adduction, and internal/external rotation. The key to this advance is exploiting the constraint that the knee acts largely as a hinge joint during many types of identifiable motions.
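The last step of that chain, going from two segment orientations to three knee angles, can be illustrated with a minimal Python sketch. It is not Vitali's method: it starts from orientations that are assumed to be already fused and aligned with the body segments, and it does not implement the hinge constraint. The function names, the axis assignment (x for flexion, y for abduction, z for internal rotation) and the X-Y-Z rotation sequence are all assumptions made for illustration.

```python
import numpy as np

def rot_x(a):
    """Rotation matrix for an angle a (radians) about the x axis."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[1, 0, 0], [0, c, -s], [0, s, c]])

def rot_y(a):
    """Rotation matrix for an angle a (radians) about the y axis."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0, s], [0, 1, 0], [-s, 0, c]])

def rot_z(a):
    """Rotation matrix for an angle a (radians) about the z axis."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0], [s, c, 0], [0, 0, 1]])

def knee_angles_deg(R_thigh, R_shank):
    """Hypothetical helper: knee angles from two segment orientations.

    R_thigh and R_shank map each segment frame to a common world frame.
    The relative rotation is decomposed as Rx(flexion) @ Ry(abduction) @
    Rz(rotation), which is an assumed convention, not one from the article.
    """
    R_rel = R_thigh.T @ R_shank  # orientation of the shank seen from the thigh
    flexion = np.arctan2(-R_rel[1, 2], R_rel[2, 2])
    abduction = np.arcsin(np.clip(R_rel[0, 2], -1.0, 1.0))
    rotation = np.arctan2(-R_rel[0, 1], R_rel[0, 0])
    return np.degrees([flexion, abduction, rotation])

# Synthetic check: pick an arbitrary thigh orientation and known knee angles.
R_thigh = rot_z(np.radians(40)) @ rot_x(np.radians(10))
R_knee = rot_x(np.radians(30)) @ rot_y(np.radians(5)) @ rot_z(np.radians(-3))
R_shank = R_thigh @ R_knee
angles = knee_angles_deg(R_thigh, R_shank)
```

Because only the relative rotation enters the calculation, the recovered angles do not depend on how the thigh itself is oriented in the world.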

In benchmarking experiments using a coordinate measurement machine, Vitali has proven her method. “Our next step is to extend the work to encompass the subtle complexities of a human knee,” she said.

Michael Potter, PhD pre-candidate, looks at running and sprinting performance with IMU data from sensors on participants’ feet. To estimate the full trajectory of each foot, the IMU data is integrated forward in time, but doing so also introduces errors from sensor drift. To overcome drift, Potter has developed algorithms that use zero-velocity updates (ZUPTs), which reduce drift by assuming the foot is stationary, at least briefly, during the stance phase of each stride.
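The idea behind a zero-velocity update can be shown with a one-dimensional Python sketch. It is a simplified illustration, not Potter's algorithm: the stance samples are assumed to be known in advance, the velocity is simply reset to zero during stance (practical systems often feed the update into a Kalman filter instead), and the function name, stride pattern and bias value are made up for the example.

```python
import numpy as np

def integrate_with_zupt(accel, dt, stationary):
    """Integrate acceleration to position, zeroing velocity during stance.

    accel      : forward acceleration samples (m/s^2), gravity already removed
    dt         : sample period (s)
    stationary : boolean array, True where the foot is assumed to be at rest
    """
    accel = np.asarray(accel, dtype=float)
    velocity = np.zeros_like(accel)
    position = np.zeros_like(accel)
    for k in range(1, len(accel)):
        velocity[k] = velocity[k - 1] + accel[k] * dt
        if stationary[k]:
            velocity[k] = 0.0  # the zero-velocity update
        position[k] = position[k - 1] + velocity[k] * dt
    return position

# Synthetic stride: 40 stance samples, then 30 speeding up and 30 slowing down.
dt = 0.01
stride_accel = np.concatenate([np.zeros(40), np.full(30, 10.0), np.full(30, -10.0)])
stride_stance = np.concatenate([np.ones(40, dtype=bool), np.zeros(60, dtype=bool)])
true_accel = np.tile(stride_accel, 10)
stance = np.tile(stride_stance, 10)
no_updates = np.zeros_like(stance)

# A constant accelerometer bias stands in for sensor drift.
measured_accel = true_accel + 0.2

true_dist = integrate_with_zupt(true_accel, dt, no_updates)[-1]
naive_dist = integrate_with_zupt(measured_accel, dt, no_updates)[-1]
zupt_dist = integrate_with_zupt(measured_accel, dt, stance)[-1]
```

In this toy case the uncorrected estimate drifts by several meters over ten strides, while the estimate with updates stays within a fraction of a meter, because the velocity error can only build up during each swing phase.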

The technique has proven accurate for walking, but little research has validated it for running. Potter first observed large differences between estimates of total distance run and known distances. He conducted tests to determine the source of the discrepancies, and early results point to sensor limitations, particularly due to lower accelerometer ranges and/or sampling rates.

“Now we’re working on a larger study so we can better understand the sensor requirements for accurate results at various speeds,” he said.

Assistant Research Scientist Lauro Ojeda is quantifying performance of running on stairs. Research using optical motion capture has relied on observations of very few steps. “Unfortunately, you can’t get to a steady state, nor can you study this in the field,” Ojeda said.

Ojeda’s data, collected as participants run up and down a full flight, has shown that when ascending, individuals tend to run. That is, they have both feet airborne during one phase of their stride. On the descent, however, data showed many instances of a double-support phase, during which both feet supported the body.
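The distinction between the two phases can be made concrete with a short Python sketch. It assumes that foot-contact flags for each foot have already been extracted from the sensor data, which the article does not describe, and the function name and sample values are hypothetical.

```python
import numpy as np

def gait_phase_fractions(left_contact, right_contact):
    """Return the fraction of samples spent in flight and in double support.

    left_contact, right_contact : boolean sequences, True while that foot
    is on a step. How contact is detected is outside this sketch.
    """
    left = np.asarray(left_contact, dtype=bool)
    right = np.asarray(right_contact, dtype=bool)
    flight = ~left & ~right         # both feet airborne: a running stride
    double_support = left & right   # both feet supporting the body
    return float(flight.mean()), float(double_support.mean())

# Made-up contact patterns, eight samples each.
ascent_left = [1, 1, 0, 0, 0, 0, 0, 0]
ascent_right = [0, 0, 0, 0, 1, 1, 0, 0]
descent_left = [1, 1, 1, 1, 1, 0, 0, 0]
descent_right = [0, 0, 0, 1, 1, 1, 1, 1]

ascent_flight, ascent_double = gait_phase_fractions(ascent_left, ascent_right)
descent_flight, descent_double = gait_phase_fractions(descent_left, descent_right)
```

With these illustrative inputs, the ascent pattern contains flight samples and no double support, while the descent pattern shows the opposite.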

“As we suspected, it’s easier for people to run up stairs than down,” said Ojeda, who also found that, across the study population, individuals’ speed between steps was fairly constant. “Whether you’re generally a slower runner or faster runner, your speed is limited by the next step. So if you speed up, you may overshoot the step; if you slow down, you may undershoot.”

In the end, the comprehensive automated system will include wearable technology, algorithms and a tablet-based app with at-a-glance metrics. “With this technology,” Perkins said, “we can identify fine-grain movements and develop metrics that really capture the essence of performance.”